Elastic Kubernetes Service, Istio IngressGateway and ALB - Health Checking

In a previous article we took a look at the very unwieldy integration of the Istio IngressGateway with an AWS Application Load Balancer, but we didn’t look at any Health Check options for monitoring the ALB via its Target Group. A dig around the usual forums suggests that this is confusing a lot of people, and it threw me the first time I looked. In this post we’ll have a quick look at why the Target Group Health Check doesn’t work by default in the first place, and how to get it properly configured on our ALB.

Unhealthy By Default?

As a quick reminder, our ALBs work in this deployment by forwarding requests to a Target Group (which contains all the EC2 Instances making up our EKS Data Plane). Using a combination of the aws-load-balancer-controller and normal Kubernetes Ingresses, this can all be created and managed dynamically, as we covered in the previous article.

Our ALB is deployed using the following Ingress configuration:

#--ingress.yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: istio-ingress
  namespace: istio-system
  annotations:
    kubernetes.io/ingress.class: alb
    alb.ingress.kubernetes.io/scheme: internal
    alb.ingress.kubernetes.io/subnets: subnet-12345678, subnet-09876543
    alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS": 443}]'
    alb.ingress.kubernetes.io/certificate-arn: arn:aws:acm:eu-west-1:123456789012:certificate/b1f931cb-de10-43af-bfa6-a9a12e12b4c7 #--ARN of ACM Certificate
    alb.ingress.kubernetes.io/backend-protocol: HTTPS
spec:
  tls:
    - hosts:
        - "cluster.tinfoil.private"
        - "*.cluster.tinfoil.private"
      secretName: aws-load-balancer-tls
  rules:
    - host: "cluster.tinfoil.private"
      http:
        paths:
          - path: /*
            backend:
              serviceName: istio-ingressgateway
              servicePort: 443
    - host: "*.cluster.tinfoil.private"
      http:
        paths:
          - path: /*
            backend:
              serviceName: istio-ingressgateway
              servicePort: 443

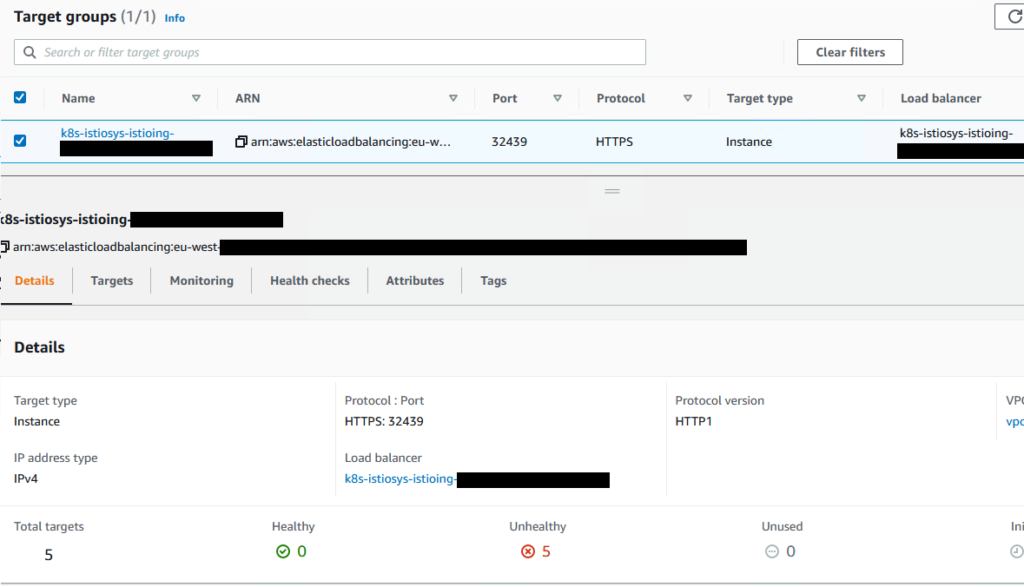

With this default configuration, our Target Group’s Health Check will be misconfigured, with all Instances reporting as Unhealthy:

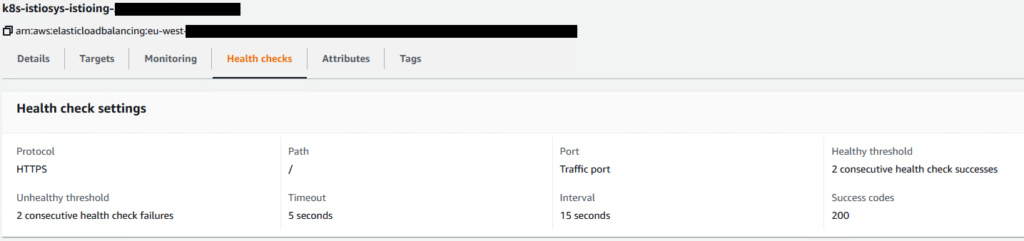

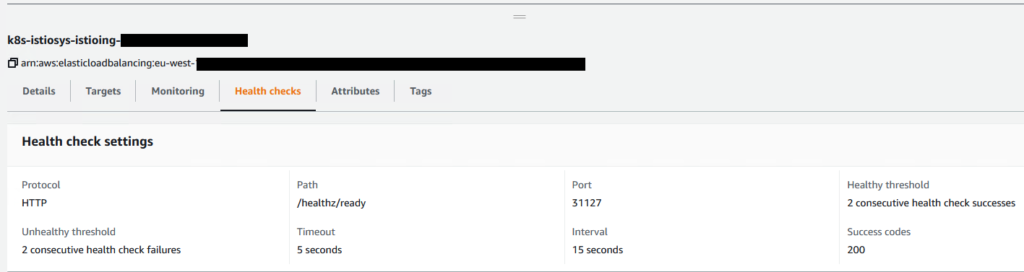
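The same Unhealthy state can be confirmed from the command line. Below is a minimal Python sketch, assuming the AWS CLI is installed and you pipe in the JSON printed by `aws elbv2 describe-target-health --target-group-arn <arn>`. The `<arn>` placeholder and the script name `target_health.py` are hypothetical; the script simply counts targets by `TargetHealth.State`.

```python
import json
import sys
from collections import Counter


def summarise_target_health(describe_output: dict) -> Counter:
    """Count targets by health state in `aws elbv2 describe-target-health` output."""
    states = Counter()
    for description in describe_output.get("TargetHealthDescriptions", []):
        states[description["TargetHealth"]["State"]] += 1
    return states


def all_healthy(describe_output: dict) -> bool:
    """True only if there is at least one target and every target is 'healthy'."""
    states = summarise_target_health(describe_output)
    return bool(states) and set(states) == {"healthy"}


if __name__ == "__main__":
    # Usage (hypothetical script name):
    #   aws elbv2 describe-target-health --target-group-arn <arn> | python3 target_health.py
    data = json.load(sys.stdin)
    for state, count in sorted(summarise_target_health(data).items()):
        print(f"{state}: {count}")
    # Non-zero exit code makes it easy to use in a script or CI check
    sys.exit(0 if all_healthy(data) else 1)
```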

We know the Instances really are available, so what’s the problem? Let’s take a look at how the Health Check is configured out of the gate: it uses HTTPS, against the traffic-port, with a path of /.

What’s going on, exactly?

Understanding the Architecture

It’s important to realise when working with NodePorts, as we are in this scenario, that the TCP ports are exposed on each of our Data Plane (or Worker) nodes themselves, which then route traffic to the relevant Service (in our case the Istio IngressGateway). These NodePorts are the very ports that our ALB’s Target Group needs to connect to.

As our Nodes are EC2 instances, our architecture looks something like this:

In the above example NodePort represents the specific TCP port number of the Istio IngressGateway’s traffic-port, which will forward traffic to TCP port 443 on the IngressGateway Service.
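To make the two hops concrete, here is a small Python sketch that walks a Service’s `spec.ports` list (the structure returned by `kubectl get svc -o json`) from a NodePort to the Service port and then to the Pod’s targetPort. The port numbers in the usage section, including the `https` targetPort of 8443, are hypothetical; use the values from your own cluster.

```python
from typing import Dict, List


def trace_node_port(service_ports: List[Dict], node_port: int) -> str:
    """Describe the hops for one NodePort: node -> Service port -> Pod targetPort."""
    for entry in service_ports:
        if entry.get("nodePort") == node_port:
            # targetPort defaults to the Service port when it is omitted
            target = entry.get("targetPort", entry["port"])
            name = entry.get("name", "unnamed")
            return f"<node-ip>:{node_port} -> Service port {entry['port']} ({name}) -> Pod port {target}"
    raise LookupError(f"NodePort {node_port} is not exposed by this Service")


if __name__ == "__main__":
    # Hypothetical values for illustration; read your own from `kubectl get svc ... -o json`
    ports = [
        {"name": "https", "nodePort": 30443, "port": 443, "targetPort": 8443},
        {"name": "status-port", "nodePort": 31127, "port": 15021, "targetPort": 15021},
    ]
    for entry in ports:
        print(trace_node_port(ports, entry["nodePort"]))
```

Each NodePort is its own path into the cluster, which is why the Health Check port has to be chosen explicitly rather than assumed to match the traffic port.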

But all this is a little theoretical. How do we actually get the Health Check to work?

Gathering the Health Check Details

The Istio IngressGateway serves several ports. The traffic-port we mentioned earlier is the one used for Health Checks by default, but this port only serves content; the Health Check endpoint is not available on it. Instead, the IngressGateway has a separate status-port for this very purpose.

So let’s gather the correct NodePort to run a Health Check against:

kubectl get svc istio-ingressgateway -n istio-system -o json | jq '.spec.ports[]'

{
  "name": "status-port",
  "nodePort": 31127,
  "port": 15021,
  "protocol": "TCP",
  "targetPort": 15021
}
#--http, https and tls ports omitted for brevity
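If you’d rather not rely on `jq`, the same lookup can be done in Python. This is a minimal sketch, assuming you pipe in the output of `kubectl get svc istio-ingressgateway -n istio-system -o json`; the script name `node_port.py` is hypothetical, and the port name `status-port` is taken from the output above.

```python
import json
import sys


def node_port_for(service: dict, port_name: str = "status-port") -> int:
    """Return the nodePort assigned to a named port on a Kubernetes Service."""
    for entry in service["spec"]["ports"]:
        if entry.get("name") == port_name:
            if "nodePort" not in entry:
                raise ValueError(f"port {port_name!r} has no nodePort (is the Service NodePort or LoadBalancer?)")
            return entry["nodePort"]
    raise LookupError(f"no port named {port_name!r} on this Service")


if __name__ == "__main__":
    # Usage: kubectl get svc istio-ingressgateway -n istio-system -o json | python3 node_port.py
    print(node_port_for(json.load(sys.stdin)))
```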

…and the endpoint that the Deployment actually defines as its Readiness Probe, which we can reuse for our Health Check:

kubectl describe deployment istio-ingressgateway -n istio-system

Name:       istio-ingressgateway
Namespace:  istio-system
...
  Containers:
   ...
    Readiness:  http-get http://:15021/healthz/ready delay=1s timeout=1s period=2s #success=1 #failure=30
   ...
...
#--Most output omitted for brevity

From this we can see that the proper endpoint to send our Health Check to is /healthz/ready on TCP port 31127 over HTTP. The NodePort maps to TCP/15021 within the istio-ingressgateway Pod, and the response tells us whether the IngressGateway is still available.
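Before touching the ALB, you can sanity-check the endpoint yourself. The sketch below is a rough approximation of the check the ALB performs: a plain HTTP GET to a node’s IP on the status-port NodePort, judged against a success-code matcher (the ALB default is `200`; ranges like `200-299` and lists like `200,202` are also accepted there). It assumes you run it from somewhere that can reach the worker nodes, which usually means inside the VPC and permitted by your security groups. The node IP in the usage line is hypothetical, and unlike the ALB, `urllib` follows redirects.

```python
import urllib.error
import urllib.request


def matches(status: int, matcher: str = "200") -> bool:
    """Check a status code against an ALB-style matcher such as "200", "200,202" or "200-299"."""
    for part in matcher.split(","):
        part = part.strip()
        if "-" in part:
            low, high = (int(x) for x in part.split("-", 1))
            if low <= status <= high:
                return True
        elif status == int(part):
            return True
    return False


def probe(host: str, node_port: int, path: str = "/healthz/ready",
          matcher: str = "200", timeout: float = 5.0) -> bool:
    """Send one plain-HTTP GET, roughly as the ALB Health Check would, and judge the response."""
    url = f"http://{host}:{node_port}{path}"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return matches(response.status, matcher)
    except urllib.error.HTTPError as err:
        # Non-2xx responses raise HTTPError but still carry a status code
        return matches(err.code, matcher)
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, etc. count as unhealthy
        return False


if __name__ == "__main__":
    # Hypothetical worker node IP; 31127 is the status-port NodePort looked up earlier
    print(probe("10.0.1.23", 31127))
```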

Be aware that this NodePort value is dynamic: look up your own and don’t just copy and paste from here! The value can also change if the Service is deleted and recreated (for example, when the IngressGateway is re-deployed), and this should be taken into consideration when automating deployments.
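For automation, it helps to read the Readiness Probe from the Deployment’s JSON rather than from `kubectl describe`, which is meant for humans. A minimal Python sketch, assuming you pipe in `kubectl get deployment istio-ingressgateway -n istio-system -o json` (the script name `readiness.py` is hypothetical). Note that the port it returns is the container port (15021), not the NodePort; the NodePort still has to come from the Service.

```python
import json
import sys
from typing import Tuple, Union


def readiness_http_get(deployment: dict) -> Tuple[str, Union[int, str]]:
    """Return (path, port) from the first container whose readinessProbe uses httpGet."""
    for container in deployment["spec"]["template"]["spec"]["containers"]:
        http_get = container.get("readinessProbe", {}).get("httpGet")
        if http_get:
            # port may be a number (e.g. 15021) or the name of a container port;
            # path is optional in the API, so fall back to an empty string
            return http_get.get("path", ""), http_get["port"]
    raise LookupError("no container in this Deployment has an httpGet readinessProbe")


if __name__ == "__main__":
    # Usage: kubectl get deployment istio-ingressgateway -n istio-system -o json | python3 readiness.py
    path, port = readiness_http_get(json.load(sys.stdin))
    print(f"path={path} containerPort={port}")
```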

Missing Annotations

As we briefly mentioned in the previous article, the annotations for ALB Ingress are not widely documented, but a comprehensive list is available here. A few more of these need to be set explicitly to make the Health Check work, and we can fill them in with the information we’ve already looked up:

#--ingress.yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: istio-ingress
  namespace: istio-system
  annotations:
    kubernetes.io/ingress.class: alb
    alb.ingress.kubernetes.io/scheme: internal #--internet-facing for public
    alb.ingress.kubernetes.io/subnets: subnet-12345678, subnet-09876543
    alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS": 443}]'
    alb.ingress.kubernetes.io/certificate-arn: arn:aws:acm:eu-west-1:123456789012:certificate/b1f931cb-de10-43af-bfa6-a9a12e12b4c7 #--ARN of ACM Certificate
    alb.ingress.kubernetes.io/backend-protocol: HTTPS
    #--ADDITIONAL ANNOTATIONS BELOW
    alb.ingress.kubernetes.io/healthcheck-protocol: HTTP #--HTTPS by default
    alb.ingress.kubernetes.io/healthcheck-port: "31127" #--traffic-port by default
    alb.ingress.kubernetes.io/healthcheck-path: "/healthz/ready" #--/ by default
spec:
  ...
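Because the NodePort is dynamic, a deployment pipeline may want to set these annotations from freshly looked-up values rather than a hard-coded file. A minimal Python sketch that builds (but does not run) a `kubectl annotate --overwrite` command for the three Health Check annotations; the Ingress name and namespace defaults come from the manifest above, and the function name is hypothetical.

```python
import shlex
from typing import List


def annotate_command(node_port: int, path: str = "/healthz/ready",
                     ingress: str = "istio-ingress", namespace: str = "istio-system") -> List[str]:
    """Build a `kubectl annotate` command that sets the three Health Check annotations."""
    annotations = {
        "alb.ingress.kubernetes.io/healthcheck-protocol": "HTTP",
        "alb.ingress.kubernetes.io/healthcheck-port": str(node_port),
        "alb.ingress.kubernetes.io/healthcheck-path": path,
    }
    return (
        ["kubectl", "annotate", "ingress", ingress, "-n", namespace, "--overwrite"]
        + [f"{key}={value}" for key, value in annotations.items()]
    )


if __name__ == "__main__":
    # Prints the command rather than running it, so it can be reviewed first
    print(shlex.join(annotate_command(31127)))
```

If the Ingress is managed from ingress.yaml, updating the file remains the more durable fix. Annotating the live object is only a stopgap for scripted environments, since the file and the cluster can drift apart.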

Does It Work?

kubectl apply -f ingress.yaml

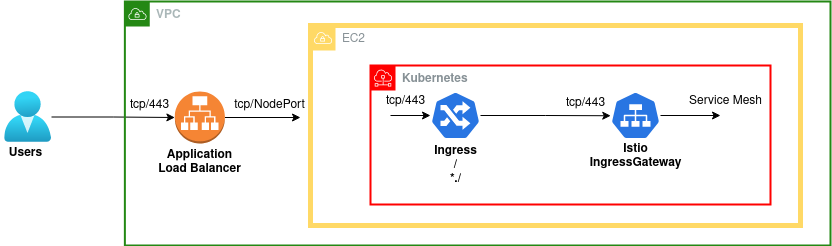

We can now see that these settings have been applied to the ALB:

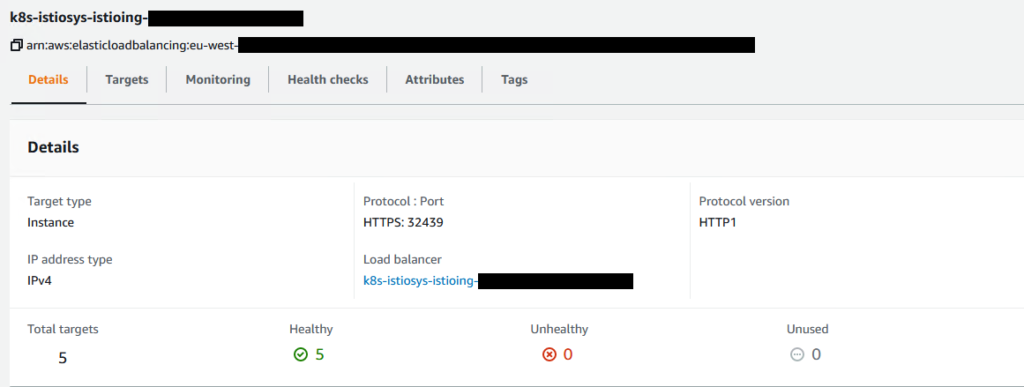

…and that the Health Check is now showing a happy load balancer:

Simple!
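If you’d rather confirm the new settings from the CLI than from the console, the Target Group description exposes them directly. A minimal Python sketch, assuming you pipe in `aws elbv2 describe-target-groups --target-group-arns <arn>` and pass your own NodePort as the first argument. The `<arn>` placeholder and the script name `tg_check.py` are hypothetical; note that the API reports `HealthCheckPort` as a string.

```python
import json
import sys
from typing import Dict, Tuple


def health_check_drift(target_group: dict, expected: dict) -> Dict[str, Tuple[object, object]]:
    """Return {field: (actual, expected)} for Health Check fields that differ."""
    return {
        field: (target_group.get(field), want)
        for field, want in expected.items()
        if target_group.get(field) != want
    }


if __name__ == "__main__":
    # Usage: aws elbv2 describe-target-groups --target-group-arns <arn> | python3 tg_check.py 31127
    expected = {
        "HealthCheckProtocol": "HTTP",
        "HealthCheckPort": sys.argv[1],  # compared as a string, as the API returns it
        "HealthCheckPath": "/healthz/ready",
    }
    for group in json.load(sys.stdin)["TargetGroups"]:
        drift = health_check_drift(group, expected)
        print(group["TargetGroupName"], "OK" if not drift else drift)
```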